Single-Photon Data

Robust 3D imaging, sensor fusion, event processing, depth and intensity restoration, underwater 3D imaging

Robust Lidar Imaging

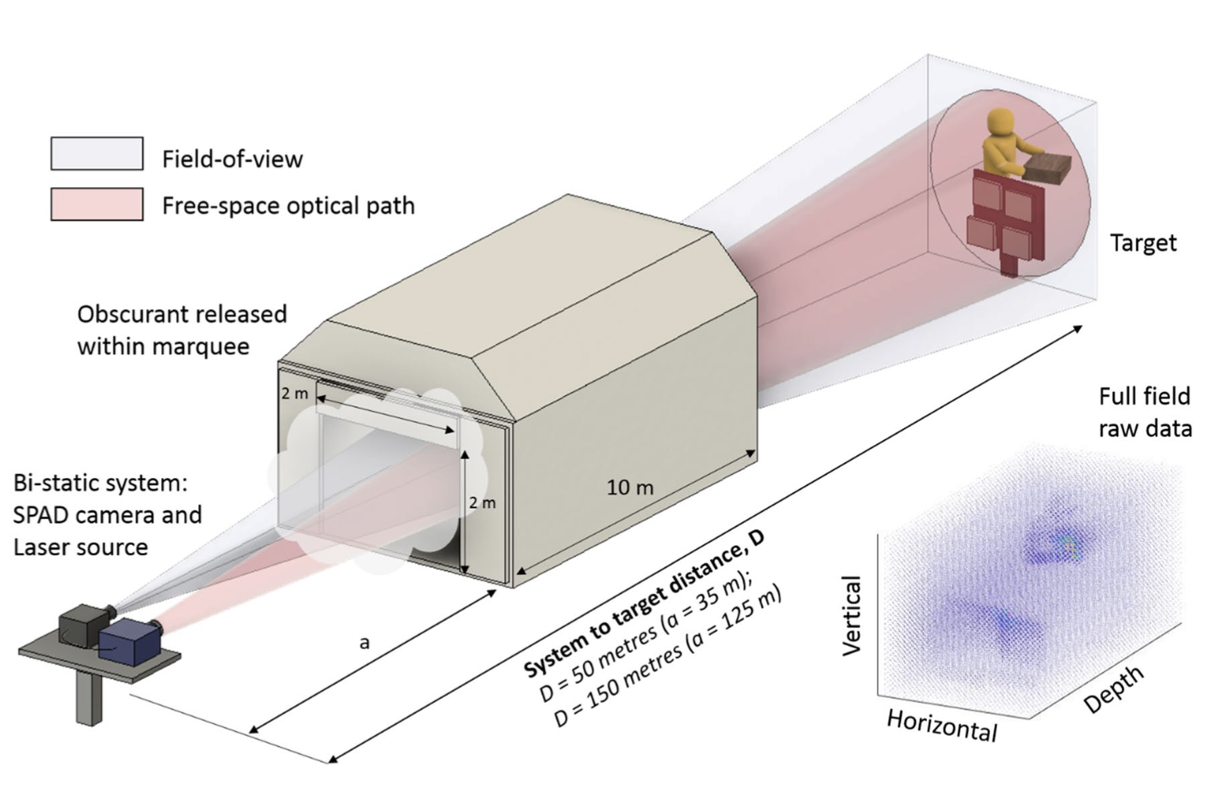

Single-photon LiDAR systems use single-photon avalanche diode (SPAD) detectors to time-stamp individual returning photons, allowing 3D scene information to be recovered from very few detected photons. Our work focuses on robust algorithms that reconstruct accurate depth and intensity images even in challenging conditions such as high background illumination, low photon counts, and the presence of obscurants.
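The per-pixel statistics behind this can be sketched with the widely used Poisson observation model. Each timing bin t of a pixel's histogram receives Poisson counts with rate signal·g(t − d) + background, where g is the instrument response and d the depth bin. The Python below is a minimal illustration under stated assumptions, not the group's robust reconstruction algorithm. It assumes a Gaussian instrument response, known signal and background levels, and a brute-force search over integer depth bins. All function names (`gaussian_irf`, `expected_counts`, `simulate_histogram`, `ml_depth`) are hypothetical.

```python
import math
import random


def gaussian_irf(num_bins, center, sigma):
    """Discretised Gaussian instrument response centred on `center`, summing to 1."""
    w = [math.exp(-0.5 * ((t - center) / sigma) ** 2) for t in range(num_bins)]
    total = sum(w)
    return [x / total for x in w]


def expected_counts(num_bins, depth_bin, signal, background, sigma):
    """Poisson rate per timing bin: signal * IRF(t - depth) + constant background."""
    return [signal * g + background for g in gaussian_irf(num_bins, depth_bin, sigma)]


def sample_poisson(rng, lam):
    """Knuth's multiplication method; adequate for the small per-bin rates used here."""
    threshold = math.exp(-lam)
    k, p = 0, 1.0
    while True:
        p *= rng.random()
        if p <= threshold:
            return k
        k += 1


def simulate_histogram(num_bins, depth_bin, signal, background, sigma, seed=0):
    """Draw one photon-count histogram for a single pixel (hypothetical simulator)."""
    rng = random.Random(seed)
    rates = expected_counts(num_bins, depth_bin, signal, background, sigma)
    return [sample_poisson(rng, lam) for lam in rates]


def ml_depth(counts, signal, background, sigma):
    """Grid-search maximum-likelihood depth bin under the Poisson model.

    Assumes the signal and background levels are known and background > 0,
    so every rate is positive and log() is defined.
    """
    num_bins = len(counts)
    best_d, best_ll = None, -math.inf
    for d in range(num_bins):
        rates = expected_counts(num_bins, d, signal, background, sigma)
        # Poisson log-likelihood, dropping the depth-independent -sum(log y!) term
        ll = sum(y * math.log(lam) - lam for y, lam in zip(counts, rates))
        if ll > best_ll:
            best_d, best_ll = d, ll
    return best_d


if __name__ == "__main__":
    y = simulate_histogram(200, 120, signal=50.0, background=0.2, sigma=2.0, seed=1)
    print("detected photons:", sum(y))
    print("estimated depth bin:", ml_depth(y, signal=50.0, background=0.2, sigma=2.0))
```

With a few tens of signal photons this estimate usually lands close to the true depth bin. The robust methods described above go further by sharing information between neighbouring pixels and handling unknown signal and background levels, which this per-pixel sketch does not attempt.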

Underwater Lidar

Underwater 3D imaging presents unique challenges because water absorbs and scatters light, which attenuates the returned signal and adds backscattered photons. We develop statistical models and algorithms designed specifically for photon propagation in underwater environments, enabling robust depth estimation and target detection through turbid water.
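A first-order picture of why turbid water is hard: under a Beer-Lambert assumption, the returned signal decays as exp(−2cz) over the round trip, where c is the beam attenuation coefficient and z the target distance, so detection quickly becomes a low-photon problem. The sketch below pairs that attenuation with a simple Poisson threshold detector at a fixed false-alarm probability. It is an illustration only: it ignores forward scattering, distance-dependent backscatter, and geometric losses, which the statistical models mentioned above are designed to handle. The function names and the numbers in the demo are hypothetical.

```python
import math


def attenuated_signal(photons_at_zero, attenuation_coeff, distance_m):
    """Beer-Lambert round-trip attenuation: photons_at_zero * exp(-2 c z)."""
    return photons_at_zero * math.exp(-2.0 * attenuation_coeff * distance_m)


def poisson_sf(k, lam):
    """P(N >= k) for N ~ Poisson(lam), via the cumulative sum of the pmf."""
    if k <= 0:
        return 1.0
    term = math.exp(-lam)  # P(N = 0)
    cdf = term
    for n in range(1, k):
        term *= lam / n  # P(N = n) from P(N = n - 1)
        cdf += term
    return max(0.0, 1.0 - cdf)


def detection_threshold(background, pfa):
    """Smallest count k such that P(N >= k | background only) <= pfa."""
    k = 0
    while poisson_sf(k, background) > pfa:
        k += 1
    return k


def detection_probability(signal, background, pfa):
    """Probability that signal + background counts reach the background-only threshold."""
    k = detection_threshold(background, pfa)
    return poisson_sf(k, signal + background)


if __name__ == "__main__":
    # Hypothetical values: 200 signal photons at zero range, c = 0.5 m^-1,
    # 1 background photon in the range gate, false-alarm probability 5 %.
    for z in (1, 2, 3, 4, 5, 6):
        s = attenuated_signal(200.0, 0.5, z)
        pd = detection_probability(s, 1.0, 0.05)
        print(f"z = {z} m ({0.5 * z:.1f} attenuation lengths): signal {s:.2f}, Pd {pd:.3f}")
```

Because the signal term shrinks exponentially with distance while the false-alarm threshold is fixed by the background, the detection probability falls off sharply beyond a few attenuation lengths.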

Multi-Spectral Lidar

Multi-spectral single-photon LiDAR combines depth imaging with spectral information, enabling simultaneous 3D reconstruction and material classification. Our research develops joint processing algorithms that exploit correlations across spectral channels for improved reconstruction quality.
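One simple way cross-channel structure can enter the likelihood: every wavelength observes the same surface, so the depth is shared while the reflectivity differs per channel, and the per-channel Poisson log-likelihoods add. The Python below is a hypothetical sketch of that idea, assuming a Gaussian instrument response and known per-channel signal and background levels. It is not the group's joint processing algorithm, which exploits richer spatial and spectral correlations; `joint_depth` and `channel_intensities` are illustrative names.

```python
import math


def gaussian_irf(num_bins, center, sigma):
    """Discretised Gaussian instrument response centred on `center`, summing to 1."""
    w = [math.exp(-0.5 * ((t - center) / sigma) ** 2) for t in range(num_bins)]
    total = sum(w)
    return [x / total for x in w]


def joint_depth(histograms, signals, backgrounds, sigma):
    """Depth shared by all wavelengths: maximise the summed Poisson log-likelihood.

    histograms: one photon-count list per spectral channel, same length.
    signals, backgrounds: assumed known per-channel levels (backgrounds > 0).
    """
    num_bins = len(histograms[0])
    best_d, best_ll = None, -math.inf
    for d in range(num_bins):
        g = gaussian_irf(num_bins, d, sigma)
        ll = 0.0
        for y, r, b in zip(histograms, signals, backgrounds):
            # Each channel has its own reflectivity r but the same depth d
            ll += sum(yt * math.log(r * gt + b) - (r * gt + b) for yt, gt in zip(y, g))
        if ll > best_ll:
            best_d, best_ll = d, ll
    return best_d


def channel_intensities(histograms, backgrounds):
    """Background-subtracted photon count per channel (moment estimate of signal level)."""
    return [max(0.0, sum(y) - b * len(y)) for y, b in zip(histograms, backgrounds)]
```

Because the log-likelihoods add, a channel too weak to localise the surface on its own still contributes evidence to the shared depth. The background-subtracted intensities form a per-pixel spectral signature that could then be compared with reference spectra for material classification.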

Multi-modal Imaging

Multi-modal approaches combine single-photon data with other imaging modalities to obtain higher-quality reconstructions. We develop sensor fusion algorithms that exploit complementary information from different sensors, including RGB cameras and event-based cameras, to improve depth estimation and scene understanding.
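As a concrete instance of guidance from a second sensor, a classic joint (cross) bilateral filter fills and smooths a sparse depth map using a co-registered intensity image. Neighbouring depths are averaged with weights that fall off with both spatial distance and intensity difference, so depth edges that coincide with intensity edges are preserved. This is a swapped-in textbook technique for illustration, not the group's fusion method, and it assumes the depth map and guide image are already aligned on the same pixel grid. The function name and parameter defaults are hypothetical.

```python
import math


def joint_bilateral_depth(depth, guide, radius=2, sigma_s=1.5, sigma_r=0.1):
    """Fill and smooth a sparse depth map using an intensity guide image.

    depth: 2D list of floats, or None where no photons were detected.
    guide: 2D list of intensities (e.g. a grey-level RGB image) on the same grid.
    Returns a new 2D list; a pixel stays None only if no valid neighbour exists.
    """
    rows, cols = len(depth), len(depth[0])
    out = [[None] * cols for _ in range(rows)]
    for i in range(rows):
        for j in range(cols):
            num = den = 0.0
            for di in range(-radius, radius + 1):
                for dj in range(-radius, radius + 1):
                    ni, nj = i + di, j + dj
                    if not (0 <= ni < rows and 0 <= nj < cols):
                        continue  # outside the image
                    if depth[ni][nj] is None:
                        continue  # no depth measurement at this neighbour
                    # Spatial weight: nearby pixels count more
                    w_s = math.exp(-(di * di + dj * dj) / (2.0 * sigma_s ** 2))
                    # Range weight from the guide: similar intensities count more
                    diff = guide[i][j] - guide[ni][nj]
                    w_r = math.exp(-(diff * diff) / (2.0 * sigma_r ** 2))
                    num += w_s * w_r * depth[ni][nj]
                    den += w_s * w_r
            out[i][j] = num / den if den > 0 else None
    return out


if __name__ == "__main__":
    # Toy scene: a dark surface at depth 1 next to a bright surface at depth 5,
    # with two missing depth pixels on either side of the boundary.
    depth = [[1.0 if j < 3 else 5.0 for j in range(6)] for _ in range(6)]
    guide = [[0.0 if j < 3 else 1.0 for j in range(6)] for _ in range(6)]
    depth[2][2] = None
    depth[3][3] = None
    filled = joint_bilateral_depth(depth, guide)
    print(round(filled[2][2], 3), round(filled[3][3], 3))
```

Setting `sigma_r` very large turns this into a purely spatial average that blurs across the object boundary, while a small `sigma_r` keeps the two surfaces apart. Event-camera fusion is not covered by this sketch.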

Related Publications

- A. Halimi, R. Tobin, A. McCarthy, S. McLaughlin, and G. S. Buller, "Robust restoration of sparse multidimensional single-photon LiDAR images," IEEE Trans. Computational Imaging, 2020.

- A. Halimi, A. Maccarone, R. A. Lamb, G. S. Buller, and S. McLaughlin, "Robust and guided Bayesian reconstruction of single-photon 3D LiDAR data: Application to multispectral and underwater imaging," IEEE Trans. Computational Imaging, 2021.

- A. Ruget, S. McLaughlin, R. K. Henderson, I. Gyongy, A. Halimi, and J. Leach, "Real-time 3D reconstruction from single-photon lidar data using plug-and-play point cloud denoisers," Nature Communications, 2021.

- A. Ruget, M. Sheridan, S. McLaughlin, I. Gyongy, A. Sheridan, A. Halimi, and J. Leach, "Pixels2Pose: Super-resolution time-of-flight imaging for 3D pose estimation," Science Advances, 2022.

- A. Halimi, A. Ruget, J. Leach, and S. McLaughlin, "Bayesian unrolling for single-photon 3D imaging," in Proc. IEEE ICASSP, 2022.

- S. Chen, A. Halimi, X. Ren, A. McCarthy, X. Su, S. McLaughlin, and G. S. Buller, "Learning non-local spatial correlations to restore sparse 3D single-photon data," IEEE Trans. Image Processing, 2019.

- A. Halimi, Y. Altmann, A. McCarthy, X. Ren, R. Tobin, G. S. Buller, and S. McLaughlin, "Restoration of intensity and depth images constructed using sparse single-photon data," in Proc. IEEE EUSIPCO, 2016.